DiFlow-TTS:

Compact and Low-Latency Zero-Shot Text-to-Speech with Factorized Discrete Flow Matching

Abstract. Despite flow matching and diffusion models having emerged as powerful generative paradigms that advance zero-shot text-to-speech (TTS) systems in continuous settings, they continue to fall short in capturing high-quality speech attributes such as naturalness, similarity, and prosody. A key reason for this limitation is that continuous representations often entangle these attributes, making fine-grained control and generation more difficult. Discrete codec representations offer a promising alternative, yet most flow-based methods embed tokens into a continuous space before applying flow matching, diminishing the benefits of discrete data. In this work, we present DiFlow-TTS, which, to the best of our knowledge, is the first model to investigate discrete flow matching directly to generate high-quality speech from discrete inputs. Leveraging factorized speech attributes, DiFlow-TTS introduces a factorized flow prediction mechanism that simultaneously predicts prosody and acoustic detail through separate heads, enabling explicit modeling of aspect-specific distributions. Experimental results demonstrate that DiFlow-TTS delivers strong performance across several metrics, while maintaining a compact model size up to 11.7 times smaller and low-latency inference that generates speech up to 34 times faster than recent state-of-the-art baselines.

Contents

Note- DiFlow-TTS (ours): All audio samples on this demo page were generated by DiFlow-TTS (NFE=128), trained on 470 hours of the LibriTTS dataset.

- MaskGCT: All audio samples on this demo page were generated using the official code and a pre-trained checkpoint, trained on English and Chinese data from Emilia, each with ~50K hours (≈100K hours total).

- VoiceCraft: All audio samples on this demo page were generated using the official code and a pre-trained checkpoint, trained on 9K hours of the GigaSpeech dataset.

- NaturalSpeech 2: All audio samples on this demo page were generated using the Amphion toolkit and a pre-trained checkpoint, trained on 585 hours of the LibriTTS dataset.

- VALL-E: All audio samples on this demo page were reproduced using the Amphion toolkit on 500 hours of the LibriTTS dataset.

- F5-TTS: All audio samples on this demo page were reproduced using the official code and trained on 500 hours of the LibriTTS dataset.

- OZSpeech: All audio samples on this demo page were generated using the official code and a pre-trained checkpoint, trained on 500 hours of the LibriTTS dataset.

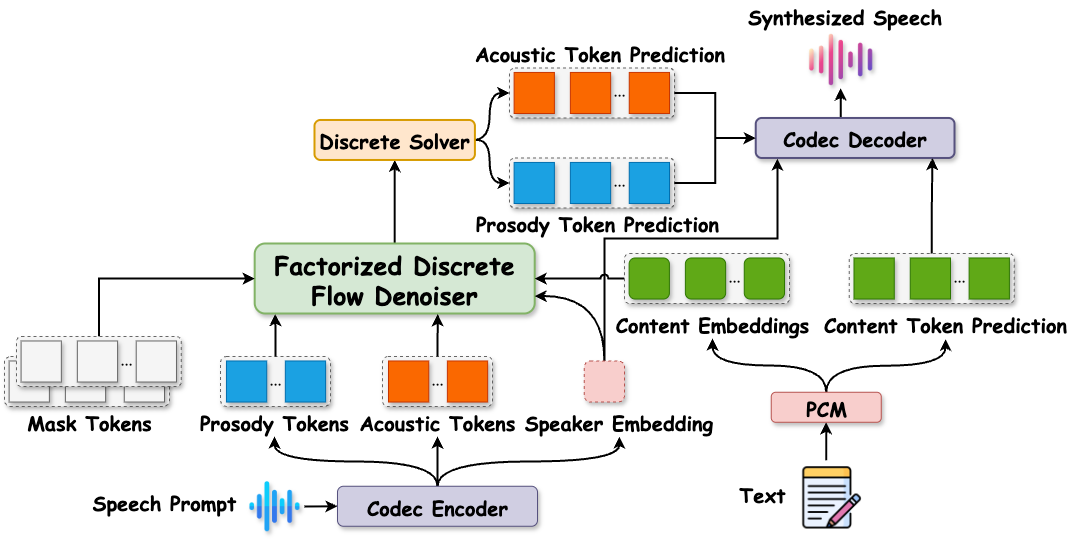

Model Overview

Figure 1. Overview of DiLow-TTS. The model decomposes the speech prompt into timbre, prosody, and acoustic tokens using a codec encoder. Input text is processed by PCM to generate content tokens and embeddings. The Factorized Discrete Flow Denoiser generates prosody, and acoustic tokens conditioned the content embeddings, speaker embedding, and the discrete prosody and acoustic tokens derived from speech prompt. A codec decoder reconstructs the final waveform.

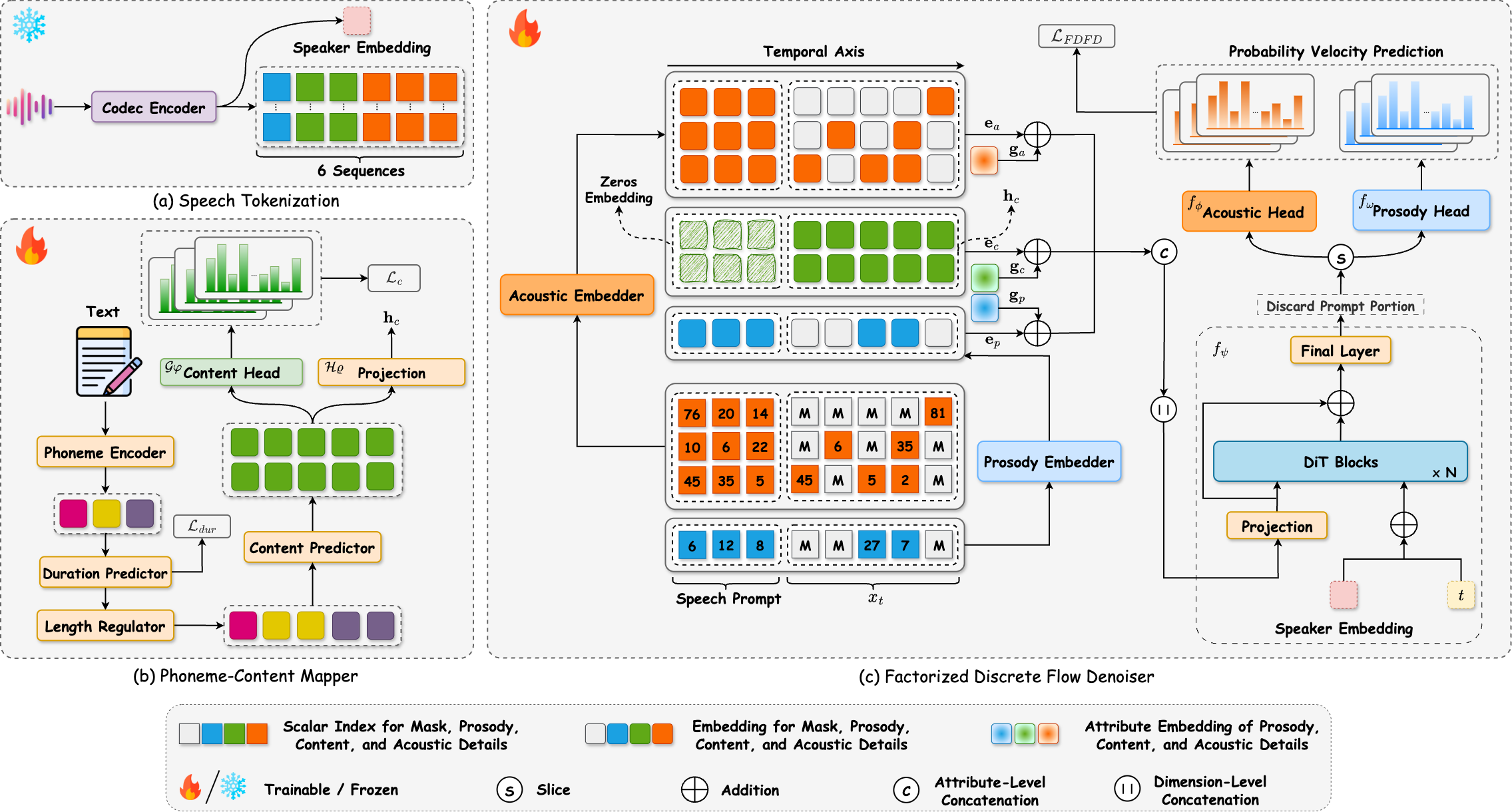

Detailed Model

Figure 2. The detailed components of DiFlow TTS. The architecture consists of three main components: (a) Speech Tokenization, which extracts discrete tokens and a speaker embedding from a raw speech; (b) Phoneme-Content Mapper (PCM), which maps input phonemes to discrete content tokens and generates the corresponding content embeddings; and (c) Factorized Discrete Flow Denoiser (FDFD), which performs discrete flow matching conditioned on the content embeddings, speaker embedding, and the discrete prosody and acoustic tokens derived from the reference speech prompt.

Zero-shot TTS (Celebrities)

DiFlow-TTS is capable of mimicking celebrity voices. The following examples are provided strictly for research purposes.

| Celebrity | Target Transcript | Prompt | DiFlow-TTS (ours) |

|---|---|---|---|

| Donald Trump | But to those who knew her well, it was a symbol of her unwavering determination and spirit. | From Spark-TTS's Demo |

|

| Optimus Prime | I don't really care what you call me. I've been a silent spectator, watching species evolve, empires rise and fall. But always remember, I am mighty and enduring. Respect me and I'll nurture you; ignore me and you shall face the consequences. | From DiTTo-TTS's Demo |

|

| Benedict Cumberbatch | The best love is the kind that awakens the soul and makes us reach for more, that plants a fire in our hearts and brings peace to our minds. And that's what you've given me. That's what I'd hoped to give you forever. | From DiTTo-TTS's Demo |

|

| Mark Zuckerberg | It is our choices that show what we truly are, far more than our abilities. | From DiTTo-TTS's Demo |

Zero-shot TTS (LibriSpeech)

All speakers are unseen during training. The audio samples are drawn from the LibriSpeech test-clean dataset, using audio prompt lengths of 1s, 3s, and 5s.

| Prompt Duration | Target Transcript | Prompt | DiFlow-TTS (ours) | MaskGCT | VoiceCraft | NaturalSpeech 2 | VALL-E | F5-TTS | OZSpeech |

|---|---|---|---|---|---|---|---|---|---|

| 1 second | therefore her majesty paid no attention to anyone and no one paid any attention to her. | ||||||||

| he often stopped to examine the trees nor did he cross a rivulet without attentively considering the quantity the velocity and the color of its waters. | |||||||||

| as used in the speech of everyday life the word carries an undertone of deprecation. | |||||||||

| i stood with my back to the wall for i wanted no sword reaching out of the dark for me. | |||||||||

| i wanted nothing more than to see my country again my friends my modest quarters by the botanical gardens my dearly beloved collections. | |||||||||

| 3 seconds | as soon as these dispositions were made the scout turned to david and gave him his parting instructions. | ||||||||

| in both these high mythical subjects the surrounding nature though suffering is still dignified and beautiful. | |||||||||

| the meter continued in general service during eighteen ninety nine and probably up to the close of the century. | |||||||||

| that is the best way to decide for the spear will always point somewhere and one thing is as good as another. | |||||||||

| there came upon me a sudden shock when i heard these words which exceeded anything which i had yet felt. | |||||||||

| 5 seconds | after proceeding a few miles the progress of hawkeye who led the advance became more deliberate and watchful. | ||||||||

| he had preconceived ideas about everything and his idea about americans was that they should be engineers or mechanics. | |||||||||

| as used in the speech of everyday life the word carries an undertone of deprecation. | |||||||||

| so no tales got out to the neighbors besides it was a lonely place and by good luck no one came that way. | |||||||||

| as soon as these dispositions were made the scout turned to david and gave him his parting instructions. |

Emotion

DiFlow performs reasonably well in mimicking emotions from reference speech prompts, even though it was mainly trained on neutral emotional data.

| Emotion | Target Transcript | Prompt | DiFlow-TTS (ours) |

|---|---|---|---|

| Angry | You said you'd always be there, but now I'm standing here alone! | ||

| Disgust | |||

| Happy | |||

| Sad | |||

| Calm |